We believe that, with the right encouragement, everyone can be a good conversationalist, even in the unlikeliest of places. Millions of conversations take place across our partners’ sites every day. To encourage quality content, we had to define a set of principles of online safety and create solutions that help our partners build thriving online communities.

As mentioned previously, we approach quality as a framework in which publishers set their own rules and enforce them through moderation tools, while their users are incentivized through exposure, rewards, and community privileges. Han, Brazeal & Pennington, in their 2018 paper, made a significant observation on online civility: “when people are exposed to civil content, they are much more likely to create civil content themselves.” So the key to success and the principles of online safety seem straightforward:

How our principles of online safety allow us to surface the best

We agreed that civility is a constraint rather than something we should constantly optimize. Simply put, we want to avoid incivility, not optimize for civility. Our moderation platform helps publishers determine their tolerance for various uncivil behaviors, expose those preferences to their audience through their Community Guidelines, and enforce these guidelines with a combination of automatic tools and a partner-operated manual moderation dashboard.

Along the way, we identified a key metric that helps us define the success of the moderation process as part of our principles of online safety. We call it the ‘Quality Score.’

Quality Score = Safety x Approval Rate

Safety is measured as a percentage and is essentially the complement of our error rate. When moderators remove a comment for breaking their Community Guidelines, we count the number of times that comment was seen by users. Safety is the percentage of exposures that showed good comments out of all comment exposures. For reference, our network benchmark stands at around 95%.

The approval rate is even simpler. It measures the percentage of comments approved for publication out of all comments submitted. To see why multiplying the two makes sense, consider these scenarios:

- If the approval rate is 100% but safety is 0%, we failed (miserably).

- If the approval rate is 0% (the platform rejects every new comment), we don’t even care about where safety stands.

- If the approval rate and safety are at 100%, we’re in the optimal scenario (moderation is not needed).
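The three scenarios above can be checked with a small sketch of the Quality Score formula. The function names, the use of exposure counts as inputs, and the handling of empty inputs are illustrative assumptions, not a description of the production implementation.

```python
def safety(good_exposures: int, total_exposures: int) -> float:
    """Share of comment exposures that showed good comments (0.0 to 1.0)."""
    if total_exposures == 0:
        # Assumption: with no exposures at all, nothing harmful was shown.
        return 1.0
    return good_exposures / total_exposures


def approval_rate(approved: int, submitted: int) -> float:
    """Share of submitted comments that were approved (0.0 to 1.0)."""
    if submitted == 0:
        # Assumption: with no submissions there is nothing to approve.
        return 0.0
    return approved / submitted


def quality_score(safety_value: float, approval_value: float) -> float:
    """Quality Score = Safety x Approval Rate."""
    return safety_value * approval_value


# The three scenarios from the list above.
print(quality_score(0.0, 1.0))  # everything approved, nothing safe
print(quality_score(0.5, 0.0))  # everything rejected, safety is irrelevant
print(quality_score(1.0, 1.0))  # optimal scenario
```

Because the two factors are multiplied, a collapse in either one drags the whole score to zero, which is why neither approving everything nor rejecting everything can look good on this metric.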

This way of measuring online safety helps us stay on top of false negatives (i.e., rejected comments that were in fact aligned with the site guidelines) while making sure we minimize exposure to comments that break them.

The third key piece, which completes the picture for us, is value. Here we draw inspiration from companies like Netflix, which look at total viewing time as their main KPI for evaluating whether their audience finds their content valuable. Essentially, time is currency. You only have a finite amount, so if you choose to spend it in one place, you have less of it to spend elsewhere. For us, the average time people choose to spend reading conversations is a good measure of the value they get out of them.

The final measurement in our principles of online safety is to make sure these KPIs are substantiated through an additional layer of user validation. Our Conversation Survey gathers feedback from users across our partner sites about the quality of conversations they are part of. Thousands of people help us improve our tools by participating in an in-conversation survey presented to them daily.

Quality earns reach, building role models

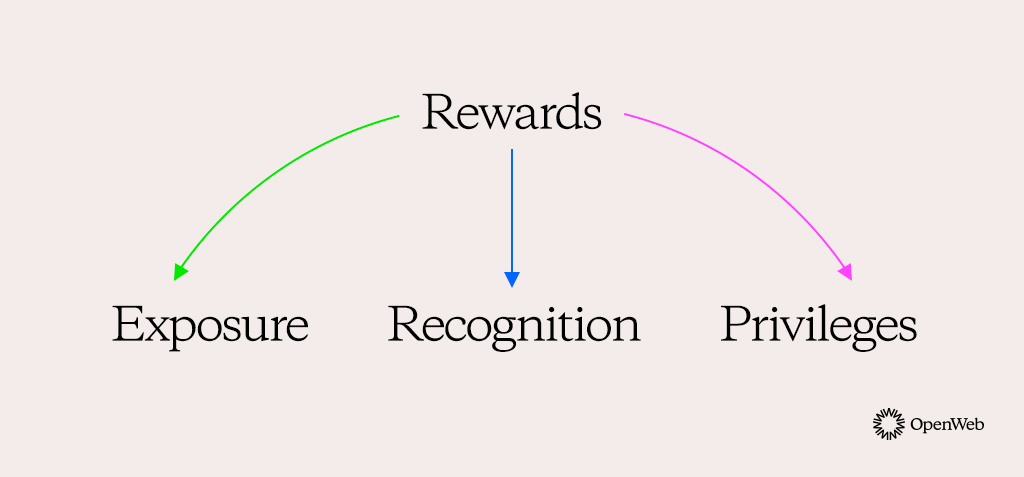

True to the age-old belief that positive reinforcement breeds positive results, our reputation mechanism rewards and incentivizes quality content and contributors in order to build positive role models in the community. These role models hold the key to setting community norms and changing other users’ behavior.

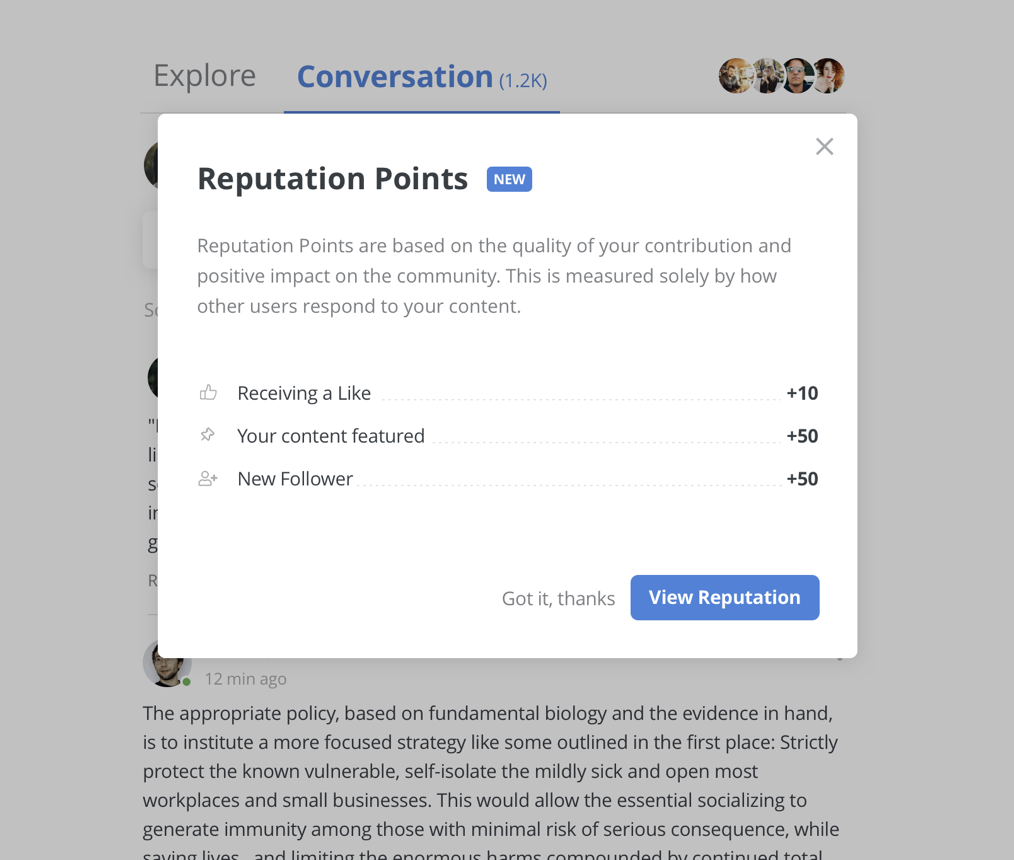

Each community should decide what’s best for itself. We do not dictate the ideal standards for quality; each community chooses its own. That is why our reputation scoring system is based solely on feedback provided by fellow community members.

The more positive impact a user has on the community, the more points they gain. Reputation scores are transparent and can be seen on users’ profiles. In a sense, the points are a measure of how much the community trusts a user, and trust plays a major role in allowing online communities to thrive.

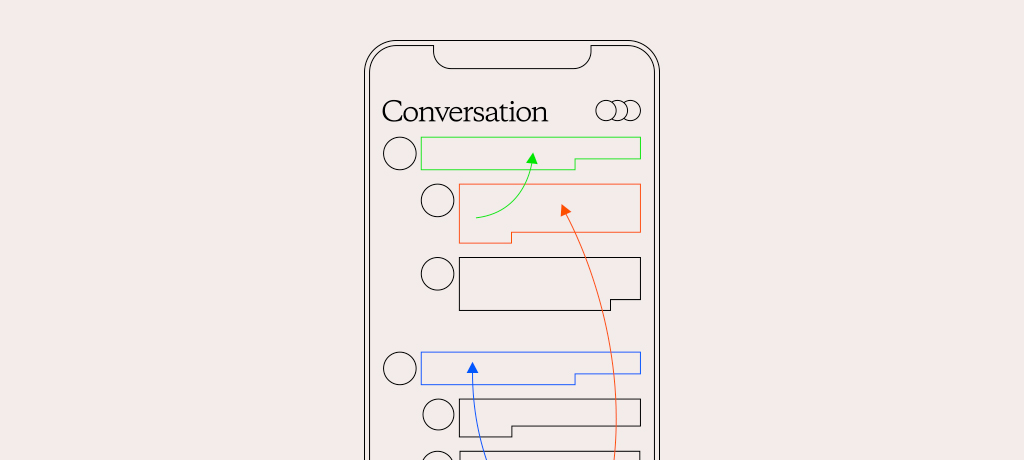

The need to be heard is the strongest psychological motivation we can use to incentivize our commenters to behave better. In our user survey, top users stated that they are most bothered by the fact that their comments are quickly buried and not seen, and the vast majority of users only read the comments at the very top. By using reputation as a weight in the sort algorithm, we expose more content from trusted members of the community at the top of the stack. To ensure that contributions from new members are not buried by the sorting algorithm, we also use the ratio of ‘likes’ to ‘dislikes’ to provide exposure for new contributors.
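As a rough illustration of how reputation and the like/dislike ratio could be combined in a sort, here is a minimal sketch. The scoring formula, the `Comment` fields, and the constants `REPUTATION_SCALE` and `REPUTATION_WEIGHT` are all hypothetical and chosen only for the example; the actual ranking algorithm is not described in this post.

```python
from dataclasses import dataclass

# Hypothetical tuning constants, chosen only for illustration.
REPUTATION_SCALE = 100.0   # reputation at which the reputation term reaches 0.5
REPUTATION_WEIGHT = 0.5    # share of the score driven by author reputation


@dataclass
class Comment:
    author: str
    author_reputation: int
    likes: int
    dislikes: int


def like_ratio(comment: Comment) -> float:
    """Smoothed share of likes, so comments with few votes start near 0.5."""
    return (comment.likes + 1) / (comment.likes + comment.dislikes + 2)


def reputation_term(comment: Comment) -> float:
    """Maps unbounded reputation points into the range [0, 1)."""
    rep = max(comment.author_reputation, 0)
    return rep / (rep + REPUTATION_SCALE)


def rank_score(comment: Comment) -> float:
    """Weighted blend of author reputation and community feedback."""
    return (REPUTATION_WEIGHT * reputation_term(comment)
            + (1 - REPUTATION_WEIGHT) * like_ratio(comment))


def rank_comments(comments: list[Comment]) -> list[Comment]:
    """Returns comments ordered from highest to lowest score."""
    return sorted(comments, key=rank_score, reverse=True)
```

In this sketch, a well-liked comment from a brand-new member can still outrank a heavily disliked comment from a member with moderate reputation, which is the effect the like/dislike ratio is meant to have.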

Recognition is a never-ending game. There is no limit on the points a user can gain, and the more reputation users gain, the more privileges they receive. Publishers can decide what kind of privileges they want to give their users based on reputation, whether that means allowing top users to start their own conversations or even to moderate fellow community members’ contributions. Publishers can also use our ‘Featured Comment’ option to pin a meaningful contribution to the top of the conversation.

When we create an environment where people understand that quality is valued and rewarded, it not only builds a positive habit but also deepens their relationship with the host of the conversation. Users are encouraged to register, create personal profiles, and establish an identity so they can grow their influence over the community.

What’s next?

When we surveyed our partners and users to identify common issues and wishes for building better online communities, we uncovered a few interesting facts:

- 17.5% of respondents stated that they were bothered by the fact that the article and discussion quickly become outdated.

- 22.5% cited the lack of opportunity to hold discussions with experts or authors outside of an article as an issue.

What if you had one audience engagement platform that could measure online safety, combine article conversations with a feed- or forum-like experience, bring together similar conversations from across the site, and give journalists and editors an opportunity to interact directly with their users?

Stay tuned for more!