Since our early days, we have focused on keeping our partners safe. We felt that safety was one of the main reasons publishers had stopped giving their users a voice. A 2014 Digiday piece, “Why some publishers are killing their comment sections,” describes how some publishers had simply “thrown in the towel on comments.”

But we didn’t give up on our mission to inspire and elevate quality conversations online. We believed we could build tools and technologies that would let hesitant publishers give their audience a voice without fearing that trolls would take over their community. We continually invested in a robust moderation platform that helps publishers reap the benefits of a healthy, engaged onsite community. Today, some of the biggest, most respected brands on the open web use our platform to give hundreds of millions of their readers a voice, and we take great pride in that.

Our moderation platform uses a multi-layered protection mechanism powered by machine learning, trusted API integrations such as Google Perspective, and an in-house content moderation algorithm. Every layer serves one goal: identifying behavior that violates the host’s Community Guidelines and therefore puts the brand and the community at risk.

As we continued to support publishers, we realized that to achieve our mission, we also needed to support society at large. We believe people are good at their core. They want to consume content, to hear, and to be heard. We spent the last couple of years talking to users across the open web about their needs, and we consistently heard that they yearned for transparency. People want to know if and when they’re doing something wrong, so they can improve and remain part of a healthy discussion.

For them, today we are releasing a new moderation experience that puts transparency first. We call it “Clarity Mode.” Clarity Mode is like bulletproof glass: you can always see what’s on the other side, but breaking through it takes some heavy lifting.

Clarity Mode helps you do three important things:

1. Set the ground rules

2. Provide feedback to drive behavioral change

3. Prevent abuse

Set the Ground Rules

The first step toward hosting better conversations is setting clear ground rules. Our moderation platform lets publishers set their tolerance for incivility and define what they expect from their community. With our new Community Guidelines product, publishers can now easily communicate these rules to users from the outset.

The guidelines product has two parts:

Guidelines brief – a one-liner located in the main conversation view (we particularly love Refinery29’s “Start a discussion, not a fire. Post with kindness”).

Guidelines description – a full description of the community guidelines, linked from the guidelines brief.

For your convenience, we provide recommended guidelines, but you can easily customize them to match your voice and meet your needs.

Community Guidelines is now available to all partners. In your admin panel navigate to Settings > Policy and look for the Community Guidelines toggle at the bottom of the page.

Communicate and Provide Feedback

If we want people to behave better, they should know when they make a mistake and how to correct it. That’s why Clarity Mode introduces three user-facing feedback features:

Real-time Feedback

Real-time feedback gives users a chance to revise their message before it is published if the content appears to break the Community Guidelines.

This feature draws on nudge theory, a well-known concept in behavioral science that proposes positive reinforcement and indirect suggestions as ways to influence the behavior and decision-making of groups or individuals.

Every time a user submits a comment, it goes through our automatic moderation algorithm. If the system identifies the content as risky or misaligned with the Community Guidelines, the user sees a notification urging them to take another look at what they wrote and consider editing it.
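To make the nudge flow concrete, here is a minimal sketch in Python. It is not our moderation algorithm: the `NUDGE_THRESHOLD` value, the message text, and the keyword-based `toy_score` function are hypothetical stand-ins for the real risk scoring. The sketch shows the shape of the interaction: a risky first submission returns a nudge instead of being posted, and a resubmission (edited or not) continues to regular moderation.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical cutoff: risk scores at or above this value trigger a nudge.
NUDGE_THRESHOLD = 0.7

# Hypothetical nudge text shown to the user.
NUDGE_MESSAGE = ("Your comment may not meet our Community Guidelines. "
                 "Take another look before posting?")


@dataclass
class SubmitOutcome:
    nudged: bool                    # True if the user was asked to reconsider
    message: Optional[str] = None   # nudge text, if any


def submit(text: str, score: Callable[[str], float],
           already_nudged: bool = False) -> SubmitOutcome:
    """Nudge once on risky content; otherwise hand off to normal moderation."""
    if not already_nudged and score(text) >= NUDGE_THRESHOLD:
        return SubmitOutcome(nudged=True, message=NUDGE_MESSAGE)
    # The comment continues to the regular moderation pipeline (not modeled here).
    return SubmitOutcome(nudged=False)


def toy_score(text: str) -> float:
    """Stand-in for a real classifier: flags a tiny hypothetical word list."""
    blocked = {"idiot", "stupid"}
    words = {w.strip(".,!?").lower() for w in text.split()}
    return 1.0 if words & blocked else 0.0
```

For example, `submit("You are an idiot.", toy_score)` returns a nudge, while `submit("I disagree with your point.", toy_score)` passes straight through. Nudging only once keeps the user in control: they can still post as-is, and the comment is then judged by normal moderation.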

After carefully testing this on thousands of conversations over the past few weeks, we found that the vast majority of users choose to revise their comment into a more positive contribution when they are nudged.

Message Feedback

When a user submits their comment, they now get immediate feedback on the status of their message. If the comment was sent to the moderation queue or rejected automatically, users get an explanation of what happened and are directed to the Community Guidelines where they can check what’s allowed and what isn’t.
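The status-to-explanation mapping described above can be sketched as a small lookup. This is an illustration only: the `Status` names, the message wording, and `GUIDELINES_URL` are hypothetical placeholders, not our actual statuses or copy.

```python
from enum import Enum

# Hypothetical link to a partner's Community Guidelines page.
GUIDELINES_URL = "https://example.com/community-guidelines"


class Status(Enum):
    APPROVED = "approved"
    PENDING = "pending"    # sent to the moderation queue
    REJECTED = "rejected"  # rejected automatically


def feedback_for(status: Status) -> str:
    """Return the explanation shown to the user right after they submit."""
    if status is Status.APPROVED:
        return "Your comment was published."
    if status is Status.PENDING:
        return ("Your comment is awaiting review. "
                f"See what's allowed: {GUIDELINES_URL}")
    # Status.REJECTED: explain why and point to the guidelines.
    return ("Your comment was not published because it may break our "
            f"Community Guidelines: {GUIDELINES_URL}")
```

Only the queued and rejected cases link to the guidelines, since those are the outcomes where the user needs to check what’s allowed.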

Users can also check their personal profile anytime to see their comment history and the status of each and every comment they’ve made.

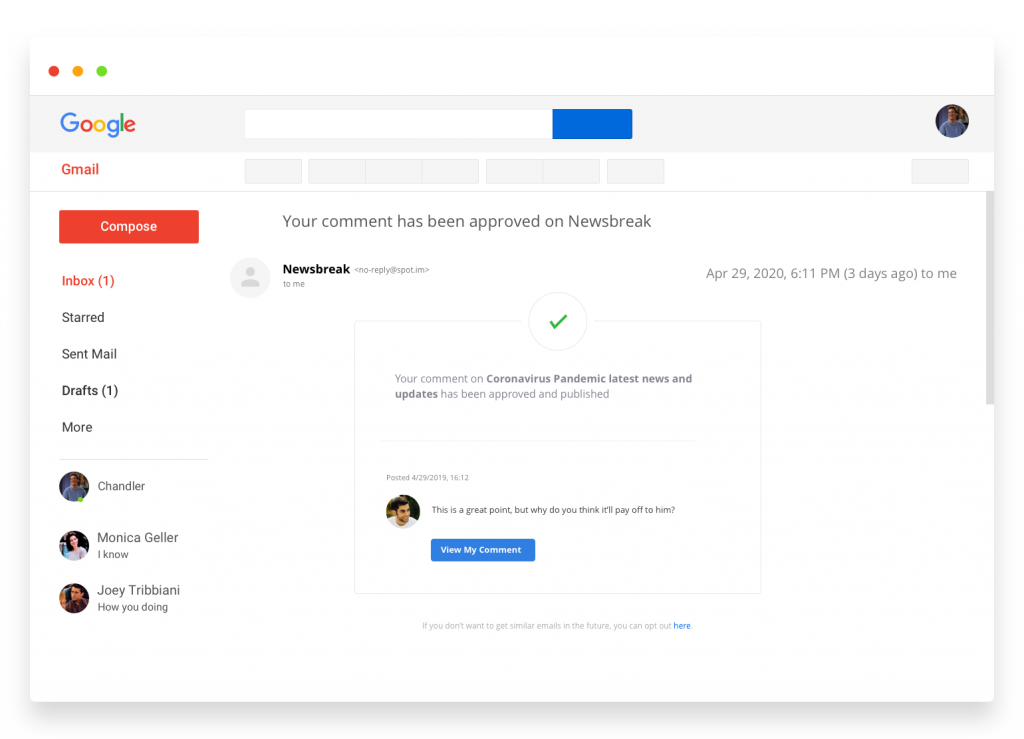

Email Notifications

A comment’s status might change after the user has already left the site. Pending comments can be approved or rejected, and a moderator may sometimes reject a comment that was automatically approved, or approve one that was automatically rejected.

From now on, when this happens, users will get an email notification inviting them to jump back into the discussion, or to try posting again if their comment was not published.
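The notification rule above can be sketched as a function of the old and new status. This is a hypothetical illustration: the status names and email text are placeholders, and it assumes that only transitions to approved or rejected send an email.

```python
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


def notification_for(old: Status, new: Status) -> Optional[str]:
    """Return an email body when a comment's status changes, else None."""
    if old is new:
        return None  # no change, nothing to send
    if new is Status.APPROVED:
        # Covers pending -> approved and a moderator overturning an auto-rejection.
        return "Your comment is now live. Jump back into the discussion!"
    if new is Status.REJECTED:
        # Covers pending -> rejected and a moderator rejecting an auto-approved comment.
        return ("Your comment was not published. Review the Community "
                "Guidelines and try posting again.")
    return None  # e.g. moved back to pending: no email in this sketch
```

Keying on the transition rather than the final status means a user is emailed once per change, including the reversal cases where a moderator overrides an automatic decision.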

Prevent Abuse

We stand for complete transparency, which is now the default behavior with these new features, but some users might try to take advantage of this new experience. Trolls, for example, may try to abuse the system by posting their rejected comments over and over again.

To prevent this, we are introducing auto-suspension. When a user repeatedly tries to post a rejected comment, they are automatically suspended from commenting further in that specific conversation. By default, suspension is triggered after three rejections, and partners can configure this threshold.
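A minimal sketch of the auto-suspension rule, assuming rejections are counted per user and per conversation. The default of three comes from the description above; the class and method names are hypothetical, and a production system would persist these counts rather than keep them in memory.

```python
from collections import defaultdict

# Default from the announcement; partners can configure a different value.
DEFAULT_SUSPENSION_THRESHOLD = 3


class AutoSuspension:
    """Counts rejections per (user, conversation) and suspends at a threshold."""

    def __init__(self, threshold: int = DEFAULT_SUSPENSION_THRESHOLD) -> None:
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        # Maps (user_id, conversation_id) -> number of rejected comments.
        self._rejections = defaultdict(int)

    def record_rejection(self, user_id: str, conversation_id: str) -> None:
        self._rejections[(user_id, conversation_id)] += 1

    def is_suspended(self, user_id: str, conversation_id: str) -> bool:
        # .get avoids inserting a zero entry just by checking.
        count = self._rejections.get((user_id, conversation_id), 0)
        return count >= self.threshold
```

Because the key includes the conversation, a user suspended in one thread can still comment elsewhere, matching the “specific conversation” scope described above. A partner wanting a stricter policy would pass a lower threshold, for example `AutoSuspension(threshold=2)`.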

What’s Next?

Initial data from these features reinforces our belief that, with the right encouragement, everyone can be a good conversationalist. By providing more transparency and education throughout the user engagement journey, these tools bring us closer to a few key goals for our partners:

• Increase comment approval rate and reduce exposure of bad comments

• Reinforce user loyalty and drive new user engagement

• Significantly improve overall community health and engagement

Clarity Mode will be released gradually to our partners over the next several weeks. Partners can adjust all of the features above in the admin panel. Clarity Mode settings are located under Settings > Policy > Moderation User Experience.