In 2021, we’ve already seen the consequences of disinformation and misinformation on social media play out in the news several times over—from the attacks on the U.S. Capitol to vaccination opponents blocking the entrance of a COVID-19 vaccination hub in L.A.

But simply reading a few articles containing false information doesn’t radicalize people and cause dangerous behavior. The true harm lies in continued exposure to this content and in the cognitive biases that exposure reinforces.

Social media exacerbates this problem. Algorithms favor clicks over quality, and there is little accountability for much of the content that is created and shared. In fact, one study of Twitter found that false news was 70% more likely to be retweeted than the truth.

While there is no silver bullet for stopping the spread of misinformation on social media, we can seek out healthier online environments for expressing ourselves, ones where accountability and quality come first.

That’s why we believe that publisher-hosted communities are essential to finding a path forward in the fight against misinformation.

In this post, we’ll break down the primary ways that social media fuels the spread of misinformation. Then, we’ll look at how some of the elements of publisher-hosted communities could help us overcome these challenges.

Accountability is absent on social media platforms

Part of the problem with misinformation and social media platforms is that anyone can create and share content—zero credentials required.

Content, once shared on social media, is removed from its original context. This places misinformation alongside real news in the timeline, blurring the distinction. Additionally, because content is shared, remixed, re-shared, repurposed, and re-uploaded, sources are often obscured—and flimsy proof that would never fly in a newsroom too often makes for salacious, irresistible, and highly shareable headlines.

In that way, social sharing resembles a game of telephone: when content is shared a thousand times over, it’s hard to verify the credibility of the original creator. The message itself can even change as others add their own spin when they share it.

The average user won’t spend time tracking down and verifying every single item in their feed, either. This is natural confirmation bias: many people will readily accept information they see on social media as true, so long as it fits in with their existing beliefs. Add to that a lack of journalistic standards for that information, and you can see how easy it is to jump to a mistaken conclusion, and then share that misinformation widely on social media.

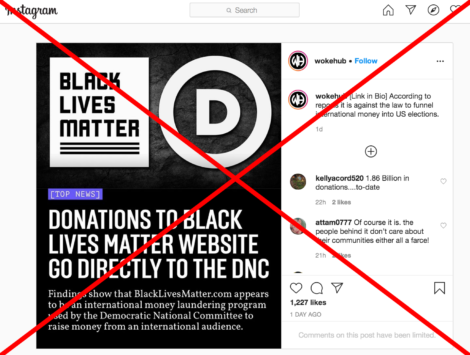

In 2020, a widely debunked and wholly disproven rumor circulated about Black Lives Matter. The rumor stated that donations made to Black Lives Matter (a 501(c)(3) non-profit organization) were actually, not-so-secretly, funneled directly to the coffers of the Democratic Party. The posts claimed that, because Black Lives Matter used ActBlue Charities as its fundraising platform, and many Democrats use ActBlue to collect donations, all donations to BLM were being used to help Democrats get into office.

Of course, this was entirely fabricated. But the average user likely didn’t have a working knowledge of how non-profit organizations collect and distribute funding, and many who disapproved of Black Lives Matter already suspected the group of being an arm of the Democratic Party. The claim neatly fit and confirmed an existing narrative, so it seemed plausible to many.

By the time this post ended up in a user’s feed, it had already been re-shared so many times that it was unclear where it originated. Without the ability to hold any one person accountable for the spread of misinformation on social media, falsehoods are more likely to take on a life of their own.

Algorithms can fuel the spread of misinformation on social media

In addition to a lack of accountability, social media algorithms can fuel the spread of misinformation.

One reason is that, in these environments, it’s easy to confuse popularity with quality. Bogus articles are surfaced to the top of our feeds because they’ve been shared and liked by our friends, not because they are award-winning content. And because these platforms want users to stay on-site for as long as possible, they continuously serve up whatever content recommendations are most likely to keep users there.

That’s how clicking on just one article riddled with conspiracy theories can lead a user down a rabbit hole of similar articles. This continued exposure can lead to something experts call complex contagion: the concept that the more we are exposed to an idea, the more likely we are to adopt and reshare it.

So not only do users on social media have little control over the content they’re exposed to, that content can also have a lasting psychological impact.

Finding a way forward with publisher-hosted communities

As a society, we need the ability to share ideas online, but there needs to be a baseline of accountability, safety, and civility. We think that publisher-hosted communities illuminate a path forward.

Why publisher-hosted communities?

First and foremost is accountability. Unlike content on social media platforms, the content on a publisher’s website is held to a stricter set of standards, and the people who share that content (the journalists) are easily identifiable. Journalists and writers are labeled as such, so they can be held accountable for the content they create. A journalist’s entire career hinges upon the trust they build with their readers, so they need to fully own the content they publish. And if something doesn’t add up? The publisher can, and should, be held accountable, too.

Another reason the conversations within publisher-hosted communities are healthier is that they retain context. Because these discussions are focused on the content itself, it’s more likely that the people in the conversation have actually read the article on the page where they’re engaging. And with more effective moderation, comments are less likely to devolve into trolling.

On a publisher’s website, the power is in the hands of the user. They can seek out, and choose to continue reading, the content that interests them, rather than having an algorithm decide for them and potentially expose them to problematic content.

Finally, publishers can set their own standards for moderation—they decide what is permissible and what isn’t. In this environment, the overall quality of the conversations is higher. This encourages more people to join the conversation, continuing the cycle of safe and civil discourse.

We all deserve a healthy, clickbait-free zone where we can freely exchange ideas, without the constant threat of misinformation undermining our conversations. When publishers become the hosts of online commentary, they have the power to create a high-quality environment for online conversations—a more civil web.