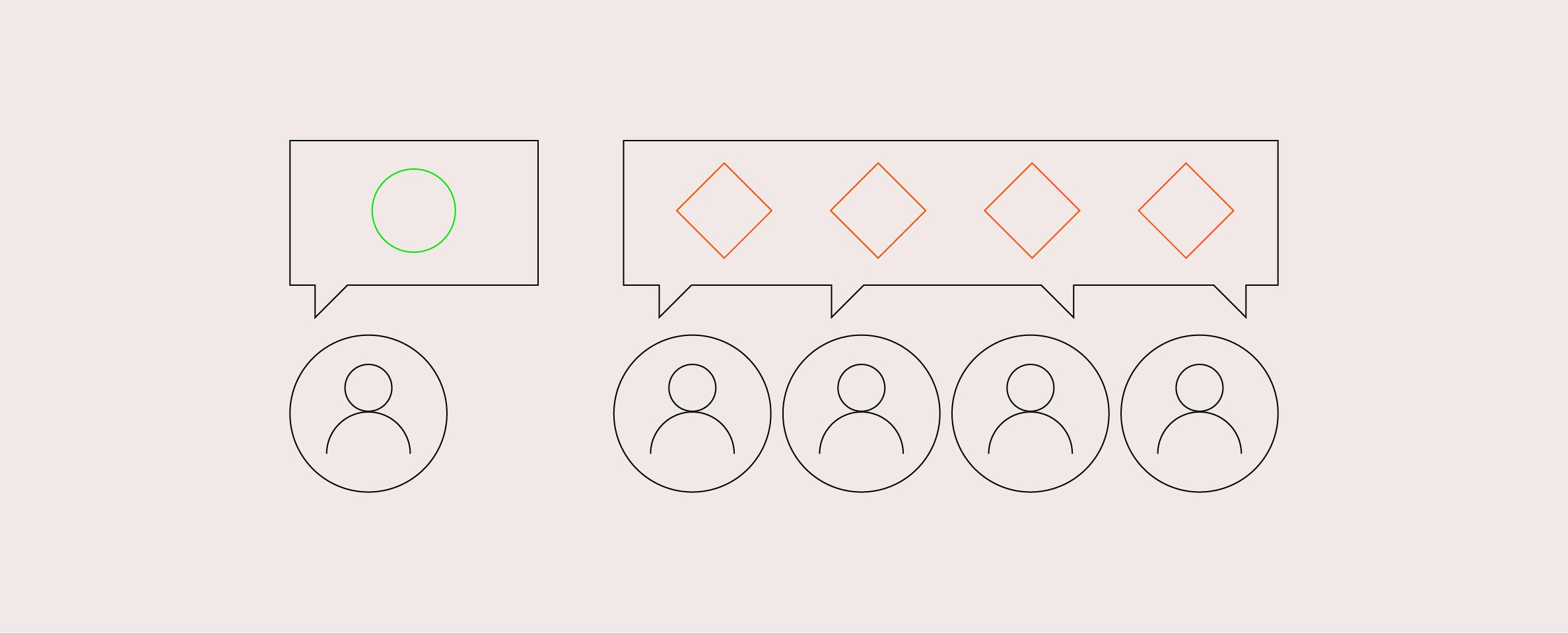

It’s no secret that, on today’s internet, we’re siloed: stuck talking to people who already agree with us, usually about the blindness and/or idiocy of the unfortunate people trapped in the other silo. When leakage occurs (when someone from one silo ends up interacting with someone from a different silo), the result, more often than not, is catastrophic, at least on social media. Resident of Silo #1 tells Resident of Silo #2 that they’re brainwashed; Resident of Silo #2 says that, actually, sorry, no, I believe you’re the one who’s brainwashed. Then they start saying stuff we’d rather not repeat. Things can get ugly at astonishing speed.

Needless to say, this is a genuine shame. There’s no question that having your viewpoint validated has value; back-patting is not a crime. But there is also real value in having your viewpoint tested. The benefits of good-faith back-and-forth are numerous. The problem is that, on much of the internet, good-faith debate is very nearly impossible to find.

As we now know, platforms like Facebook are built in ways that stoke discord, highlighting the posts most likely to drive an extreme emotional reaction from users. Is there a way to preserve dissent online without inviting toxicity? The answer, we’d argue, is yes. It just depends on good moderation.

Why dissent matters

Before delving into what moderation can do, it’s worth expanding on the benefits of dissent. When one side (any side) spends too much time talking to itself, it risks losing touch with the outside world. Assumptions go unquestioned, and groupthink takes hold. Dissent, whether from members of the community itself or from entirely different communities, can help counteract these tendencies. It can help people recognize flaws or blind spots in their arguments, or open their eyes to dimensions of an issue they might never have considered.

More to the point: without dissent, things get boring. When you can instantly guess your community’s reaction to this or that news story; when you hear the same trite remarks, day in and day out; when it feels like everything’s settled, and the task of each day is to repeat and strengthen what you already believe… well, that’s when dissent can make a real difference. Dissent, contrary to its negative associations, can actually keep things interesting.

Of course, the world we’re describing here—in which people with clashing views work jointly towards some version of truth, or at least argue without resorting to caps-lock—depends on good-faith engagement. A vast gulf exists between respectful disagreement and ad-hominem insult-trading.

Plenty of people online really do want to talk things out, but too often they’re drowned out by the “bad eggs”: the small but influential subset of users whose main interest is sowing chaos. This is where moderation can really make a difference.

Moderation can keep things interesting—and safe

OpenWeb’s moderation tools help keep discourse civil. Our AI/ML-powered technology analyzes each comment before it’s posted, detecting incivility levels, author attacks, spam and abuse, and more. Our tools also track behavior at the user level, allowing us to de-emphasize contributions from users with a history of derailing the conversation. Respectful contributions, meanwhile, are spotlighted, setting the tone for the larger conversation.

Depending on the subject, that conversation might be lighthearted or serious, funny or intense. There may be real disagreements (on the merits of a politician, a pot roast recipe, a streaming show), and these disagreements might lead to lengthy debate. That, we believe, is a very good thing; it’s part of the fun of being online. The goal, wholly achievable through OpenWeb’s technology, is to foster that spirit of debate without letting it lapse into toxicity: keeping things safe for everyone involved without stifling the conversation.

The community plays a role in keeping things safe, too: OpenWeb offers users easy ways to flag comments that they feel are out of line, empowering them to police the boundaries of their own communities. When enough people have flagged a comment, OpenWeb’s human moderation—operational 24/7, 365 days a year—will promptly take a closer look.

A vibrant community can make a major difference

The kinds of conversation that all this moderation makes possible can create significant value for publishers. Lively conversation keeps users coming back: they get to know and like the regulars, and become regular commenters themselves. That means more return visits, more time on site, more registrations, and more paid subscriptions. It means more loyalty to your brand, your content, and the community thriving around both. And it means more first-party data, which you can use to target your readership more precisely. (With third-party cookies increasingly restricted, first-party data is more important than ever.)

With users increasingly fed up with the pointless strife on social media, publishers have an excellent chance to position themselves as an alternative: a place where people can engage in friendly debate without insulting or doxxing one another. In an environment like that, even the most hardened troll just might find themselves contributing something useful.