A person reads an article, and has something to say about it. The person types out their comment, smooths out the typos, and clicks ‘submit.’ In the traditional model of online commenting—still in wide use today—that comment instantly appears, unvetted, beneath the article in question, slotting chronologically into a growing stream of comments. Alternatively, there’s the social media model, where that comment is automatically evaluated for its outrage-potential and—if it looks like it’ll make people angry—shown to as many people as possible.

Maybe unsurprisingly, both of these models have mostly resulted in chaos. At OpenWeb, we believe there’s another way. Using a rigorous process, our tools put each and every comment through multi-layered moderation designed to keep online communities healthy and to help publishers grow their audiences. How does it work?

Step One: Linguistic Analysis

Our Incivility Filters are the first line of defense. The moment a comment is posted, it’s swarmed by a battery of linguistic security guards. Its semantic patterns are analyzed (i.e., does this comment resemble other comments that are known to be negative?) and its incivility level is measured. The comment is examined up and down for any indication that it attacks the article’s author or advocates noxious ideologies like white supremacy. The commenter’s IP address is inspected and matched against a blocklist. Obvious spam and abuse, it goes without saying, are turned away at the door.

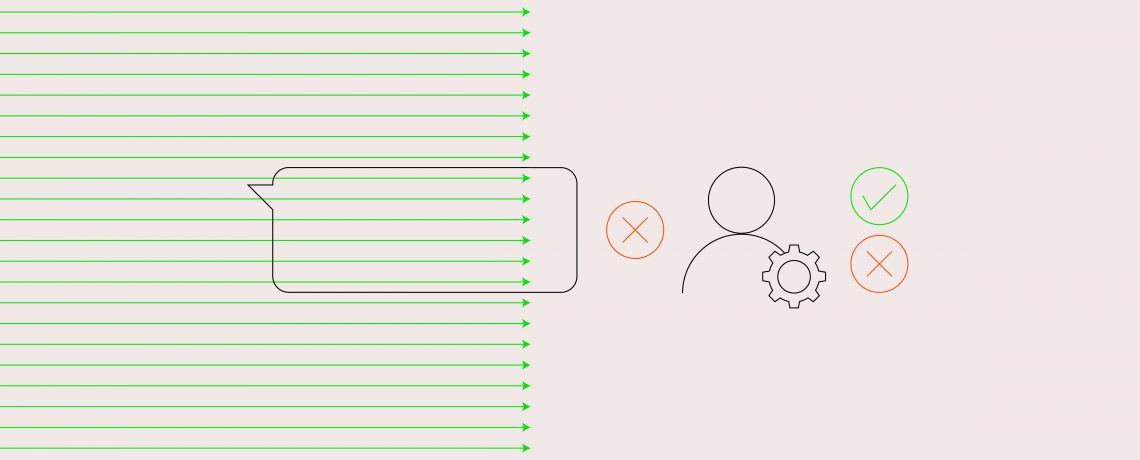
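As a rough illustration only, and not a description of OpenWeb’s actual filters, a first-pass check of this kind could be sketched as follows. The blocklist entries, the term list, the threshold, and every function name here are hypothetical, and the word-matching score is a toy stand-in for real semantic analysis.

```python
from dataclasses import dataclass

# Hypothetical values for illustration; not OpenWeb's real lists or thresholds.
BLOCKED_IPS = {"203.0.113.7"}
UNCIVIL_TERMS = {"idiot", "moron", "stupid"}
INCIVILITY_THRESHOLD = 0.2


@dataclass
class Comment:
    text: str
    ip: str


def incivility_score(text: str) -> float:
    """Fraction of words found in the toy uncivil-term list (0.0 to 1.0)."""
    words = [w.strip(".,!?;:\"'").lower() for w in text.split()]
    words = [w for w in words if w]
    if not words:
        return 0.0
    return sum(w in UNCIVIL_TERMS for w in words) / len(words)


def first_pass(comment: Comment) -> str:
    """Return 'reject' or 'pass' for a newly posted comment."""
    # Layer 1: the commenter's IP address is matched against a blocklist.
    if comment.ip in BLOCKED_IPS:
        return "reject"
    # Layer 2: comments scoring at or above the threshold are turned away.
    if incivility_score(comment.text) >= INCIVILITY_THRESHOLD:
        return "reject"
    return "pass"
```

A production system would replace the word list with trained language models; the point of the sketch is only that each layer can reject a comment before the next one runs.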

Step Two: Civility Profiles

Once the comment itself is vetted, we move on to the commenter. OpenWeb remembers each user’s past contributions, and uses these to build up a predictive profile. If a user, in the past, has picked a fight with fellow commenters, or made cruel remarks about an article’s author, they will likely have a dismal Civility Profile, and will accordingly be granted less trust. A user with a history of positive, engaged contributions, meanwhile, will be favored by the system. Evaluating these trust levels in conjunction with the linguistic analysis, OpenWeb identifies the highest-caliber comments and ensures that they’re the ones your users see the most.
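To make the idea of combining user trust with linguistic analysis concrete, here is a minimal sketch under stated assumptions. The `CivilityProfile` fields, the smoothing formula, and the 0.4 trust weight are all invented for illustration and are not taken from OpenWeb’s system.

```python
from dataclasses import dataclass


@dataclass
class CivilityProfile:
    """Toy record of a user's history; field names are hypothetical."""
    civil_comments: int = 0
    flagged_comments: int = 0

    def trust(self) -> float:
        # Smoothed share of civil comments, so a brand-new user starts at 0.5.
        total = self.civil_comments + self.flagged_comments
        return (self.civil_comments + 1) / (total + 2)


def rank_score(civility: float, profile: CivilityProfile,
               trust_weight: float = 0.4) -> float:
    """Blend a per-comment civility score (0 to 1) with the author's trust."""
    return (1 - trust_weight) * civility + trust_weight * profile.trust()


def rank_comments(comments):
    """Sort (text, civility, profile) tuples from highest to lowest score."""
    return sorted(comments, key=lambda c: rank_score(c[1], c[2]), reverse=True)
```

With this weighting, two comments with the same civility score are ordered by their authors’ histories, so a user with a long record of civil contributions surfaces above one with a record of flagged comments.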

Step Three: Crowd Signals & Manual Moderation

Now, finally, after passing successfully through several rounds of security, the comment makes its grand entrance on the stage. But the moderation process doesn’t end there. Instead, the baton is passed from automated moderation to your publication’s community. At OpenWeb, we believe that with the right tools in place, crowds are perfectly capable of self-regulating. OpenWebOS makes it easy for community members to flag a potentially out-of-bounds comment. When the crowd signal is strong enough, the offending comment is passed along to the final judge: a human moderator. Our staff of human moderators is on the job 24/7, 365 days a year. If they determine that the comment violates the guidelines set out by OpenWeb (or by the publisher, since with OpenWeb comment moderation is customizable), it’ll be promptly removed.
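The escalation from crowd flags to human review can be pictured with a small sketch. This is an assumption-laden illustration, not OpenWebOS code: the `FlagTracker` class, its method names, and the threshold of three distinct flaggers are hypothetical.

```python
from collections import defaultdict

FLAG_THRESHOLD = 3  # hypothetical number of distinct flaggers needed


class FlagTracker:
    """Collects community flags and queues comments for human review."""

    def __init__(self, threshold: int = FLAG_THRESHOLD):
        self.threshold = threshold
        self._flags = defaultdict(set)  # comment id -> ids of users who flagged it
        self.review_queue = []          # comment ids awaiting a human moderator

    def flag(self, comment_id: str, user_id: str) -> bool:
        """Record a flag; return True only if this flag triggers escalation."""
        flaggers = self._flags[comment_id]
        if user_id in flaggers:
            return False  # repeat flags from the same user are ignored
        flaggers.add(user_id)
        if len(flaggers) == self.threshold:
            self.review_queue.append(comment_id)
            return True
        return False
```

Counting distinct users rather than raw flags keeps a single person from escalating a comment alone, and the comment is queued exactly once, when the threshold is first reached. The final decision still rests with the human moderator.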

You don’t need a psych degree to know that people take cues from those around them. If you walk into a party, and everyone’s hollering and cursing and hurling abuse, a message is transmitted—namely, “being a jerk is okay here.” Conversely, it takes a very rare personality type to enter a quiet library or cafe and start shouting. When strangers come together in a public space, they naturally establish behavior norms. By using multi-layered content moderation to draw attention to positive, thoughtful contributions, OpenWeb helps to establish healthy norms from the very start of the conversation. The end result is intelligent, engaged, decidedly non-toxic discourse—because as we’ve learned, positive comments inevitably generate more positive comments.

Healthy Conversation is Good For Publishers

Polite, non-toxic conversation is a good thing in and of itself—but it doesn’t hurt that it helps a publisher’s bottom line, too. The fact is that when users engage in quality conversation, they spend more time on-site and are far likelier to make a return visit (or, better yet, register as a formal user of your site, a functionality available via OpenWeb Identity). More users mean more conversation, which means, inevitably, more first-party data—a necessity, in the cookieless world that is looming on the horizon. Through our partnership with LiveRamp, OpenWeb Activate allows publishers to activate and make the most of first-party data.

But maybe the most useful byproduct of a vibrant comment section is what it can tell you about what your audience wants to read. AI and ML are great tools for determining whether a given comment potentially breaks the rules, but they are also great for determining what a given comment says about your content. Using our unique sentiment and emotion analysis, OpenWeb Activate can help you see whether your content is resonating, and provide you with real-time, actionable insights into trending topics, articles of interest, engagement levels, and more.

Curated Conversation Makes Everyone Happy

As you read this, countless people are engaged in conversation via OpenWeb. The reason these conversations haven’t been derailed, or prevented from getting started in the first place, is that OpenWeb’s multi-layered tools curated the environment, creating a space in which the odds of an unpleasant encounter are drastically reduced. The users, of course, are barely aware of this: they’re just enjoying themselves. And in the end, that’s what we think comment moderation should be: unobtrusive, beneficial to users and publishers, and conducive, ultimately, to a very fun time.